Best Big Data Processing And Distribution Systems - Page 8

Big data processing and distribution systems offer a way to collect, distribute, store, and manage massive, unstructured data sets in real time. These solutions provide a simple way to process and distribute data amongst parallel computing clusters in an organized fashion. Built for scale, these products are created to run on hundreds or thousands of machines simultaneously, each providing local computation and storage capabilities. Big data processing and distribution systems provide a level of simplicity to the common business problem of data collection at a massive scale and are most often used by companies that need to organize an exorbitant amount of data. Many of these products offer a distribution that runs on top of the open-source big data clustering tool Hadoop.
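As a rough illustration of the "local computation, then combine" pattern described above, here is a minimal single-machine sketch. It is not taken from any product in this category: the function names are hypothetical, threads stand in for cluster nodes, and a word count stands in for a real workload.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(records, num_workers):
    """Split records round-robin so each worker holds its own local slice."""
    return [records[i::num_workers] for i in range(num_workers)]


def local_count(chunk):
    """Map step: a worker counts words using only the data it holds locally."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(records, num_workers=4):
    """Run the map step in parallel, then merge the partial counts (reduce step)."""
    chunks = partition(records, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(local_count, chunks))
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds counts together
    return total
```

On a real cluster the partitions would live on separate machines and the merge step would run over the network, but the division of work is the same.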

Companies commonly have a dedicated administrator for managing big data clusters. The role requires in-depth knowledge of database administration, data extraction, and scripting in the host system's scripting languages. Administrator responsibilities often include implementing data storage, performance upkeep, maintenance, security, and pulling data sets. Businesses often then use big data analytics tools to prepare, manipulate, and model the data collected by these systems.

To qualify for inclusion in the Big Data Processing And Distribution Systems category, a product must:

G2 takes pride in showing unbiased reviews on user satisfaction in our ratings and reports. We do not allow paid placements in any of our ratings, rankings, or reports. Learn about our scoring methodologies.

This description is provided by the seller.

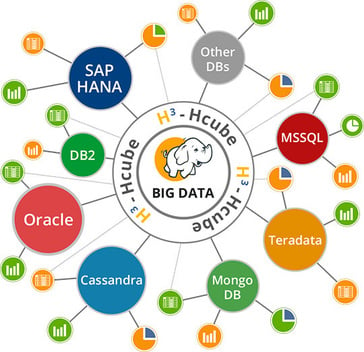

HCube is a Hortonworks certified and multi-functional data ingestion and analytics solution.

The Infoworks Autonomous Data Engine automates data engineering for end-to-end big data workflow processes from ingestion all the way to consumption, helping customers implement to production in days using 5x fewer people.

Inquire is a software development kit that performs fuzzy text-based filtering, searching, matching, and linking functions towards discovery of useful information in identity data.
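To make "fuzzy text-based matching" concrete, here is a minimal sketch using Python's standard `difflib` module. It does not show Inquire's API, which is not described here. The `normalize` and `fuzzy_match` helpers and the 0.8 cutoff are assumptions chosen for illustration.

```python
import difflib


def normalize(name):
    """Lowercase and collapse whitespace so trivial differences do not affect the score."""
    return " ".join(name.lower().split())


def fuzzy_match(query, candidates, cutoff=0.8):
    """Return (candidate, score) pairs whose similarity meets the cutoff, best first."""
    normalized_query = normalize(query)
    scored = []
    for candidate in candidates:
        score = difflib.SequenceMatcher(
            None, normalized_query, normalize(candidate)
        ).ratio()
        if score >= cutoff:
            scored.append((candidate, score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

For example, `fuzzy_match("Jon Smith", ["John Smith", "Jane Doe"])` keeps only the "John Smith" entry, since the misspelled query is still close to it. Matching on identity data at scale would also need blocking and field-specific comparison rules, which this sketch leaves out.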

Iotics’ revolutionary digital twin technology allows for communication across the entire digital ecosystem of your assets. Whether it’s people, places, processes, or things, Iotics bridges the gap between each of them through our unique data mesh technology, completely overriding the limits of organizational boundaries or differing data languages without sacrificing security. The sky's the limit in regard to confidently sharing data internally, externally, and across platforms. Iotics allows for an exchange of data between the smallest sensors to the largest power stations; from a single running train to an entire network of airplanes. In short, there is no limit to what Iotics can connect. In the world of Iotics, enterprises, communities, and even entire cities become digitally enabled and therefore capable of communicating with each other as unique data sources. Iotics weaves a web between these sources, turning them into discoverable, interactive assets.

Integrate, Federate, Migrate, Populate, Accelerate

Onehouse's Lakehouse Table Optimizer is a fully managed service designed to enhance the performance and cost-efficiency of data lakehouse environments. By automating critical configurations such as clustering, compaction, and data cleaning, it ensures optimal read and write operations without the need for manual intervention. This solution supports platforms like Apache Hudi™, Apache Iceberg, and Delta Lake, providing seamless integration and hands-free management.

LakeView is a free observability tool designed to enhance the management and optimization of data lakehouse environments, particularly those utilizing Apache Hudi. By providing comprehensive insights into table performance and health, LakeView empowers data engineers to monitor, debug, and optimize their data operations effectively. Its user-friendly interface offers interactive charts and metrics, enabling quick assessments and proactive issue resolution without accessing base data files, thereby ensuring data privacy.

Lentiq is a multi-cloud, production-scale data lake as a service with a fully distributed architecture made of interconnected data pools. The data pools are completely independent, decentralized, and can run on different cloud providers, but they communicate through data, code and knowledge sharing, which helps deliver high-quality results for data science projects. Lentiq’s greatest benefit is allowing data teams (data scientists, data engineers, software developers, data operations or business analysts) to leverage the best tools and skillsets available for the job. In comparison to conventional data lake design patterns, with Lentiq, governance rules apply only when data is shared.

Market Locator is a big data monetization solution for telcos. The platform helps major telcos monetize their big data and provide it to internal marketing teams, third-party businesses, and the public sector for location intelligence, targeted marketing, and KYC / risk scoring. Thanks to its unique approach and architecture, it allows telcos to unlock the value they believe they have in their data, and to do so in a way that is fair to customers and compliant with GDPR-like regulations. It has been tested and proven in several markets with world-class telcos such as Slovak Telekom (Deutsche Telekom Group), Orange, O2, and STC, and is delivered on a SaaS license fee model. Get the most out of your data!

The low code Megaladata platform empowers business users by making advanced analytics accessible.

- Visual design of complex data analysis models with no involvement of the IT department and no need for programming.

- Over 60 ready-to-use processing components.

- Easy integration with various sources.

- Fast processing of large datasets achieved through in-memory computing and parallelism.

- Reusable components that facilitate accumulation of business expertise.

- Advanced visualization: OLAP cubes, tables, charts, and other specialized tools.

Megaladata minimizes the time between hypothesis testing and a fully functional business process.

MPS IntelliVector is a data extraction and process automation solution tailored for the financial, insurance, and government sectors.

Observo Data Lake is an AI-powered observability platform designed to help organizations optimize their observability data, significantly reduce costs, and enhance incident response times. By leveraging advanced AI and machine learning models, Observo Data Lake enables businesses to streamline their data management processes, ensuring efficient and cost-effective operations.

Increased automation and flexibility: ramp up your revenue through higher efficiency! Easily extend your datacenter capacity by shifting load to Amazon EC2, Azure, etc. openQRM Enterprise - Enterprise Edition.

Phizzle's phz.io solution provides a fast, flexible way for brands to gather, analyze, and act on customer-generated data at massive scale.

RisingWave is an open-source distributed SQL streaming database built for the cloud. It is designed to reduce the complexity and cost of building real-time applications. RisingWave consumes streaming data, performs incremental computations when new data comes in, and updates results dynamically. As a database system, RisingWave maintains results in its own storage so that users can access data efficiently. For more details about RisingWave, see https://risingwave.com/.
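The idea of incremental computation over a stream can be sketched in a few lines of Python. This is a conceptual illustration only, not RisingWave's implementation or interface. The `IncrementalAverage` class is hypothetical, and it keeps state in memory where a real system would use durable storage.

```python
from collections import defaultdict


class IncrementalAverage:
    """Keeps a per-key running count and sum so results update without rescanning."""

    def __init__(self):
        self._count = defaultdict(int)
        self._total = defaultdict(float)

    def ingest(self, key, value):
        """Fold one new event into the stored state (constant work per event)."""
        self._count[key] += 1
        self._total[key] += value

    def result(self, key):
        """Read the current average from stored state; None if the key is unseen."""
        count = self._count.get(key, 0)
        return self._total[key] / count if count else None
```

Each call to `ingest` touches only the state for one key, so the cost of keeping a result current depends on the rate of new events and not on the total history.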

Frequently asked questions about Big Data Processing And Distribution Systems

To assess the ROI of investing in Big Data Processing software, consider factors such as improved data handling efficiency, cost savings from automation, and enhanced decision-making capabilities. User reviews indicate that platforms like Apache Spark and Apache Kafka significantly reduce processing times, with users reporting up to 50% faster data analysis. Additionally, tools like Snowflake and Google BigQuery are noted for their scalability, which can lead to lower operational costs as data needs grow. Evaluating these metrics against your current costs will help quantify potential ROI.

Implementation timelines for Big Data Processing and Distribution tools vary significantly. For instance, Apache Kafka users report an average implementation time of 3 to 6 months, while Snowflake users typically see timelines of 1 to 3 months. Databricks users often experience a range of 2 to 4 months for full deployment. In contrast, Amazon EMR implementations can take anywhere from 1 month to over 6 months, depending on the complexity of the use case. Overall, most users indicate that timelines can be influenced by factors such as team expertise and project scope.

Deployment options significantly influence Big Data Processing solutions by affecting scalability, performance, and cost. For instance, cloud-based solutions like Snowflake and Amazon EMR are favored for their flexibility and ease of scaling, with users noting improved performance in handling large datasets. On-premises solutions, such as Apache Hadoop, offer greater control and security but may involve higher upfront costs and maintenance efforts. Users often highlight that hybrid deployments provide a balance, allowing for optimized resource allocation and enhanced data governance.

Essential security features in Big Data Processing tools include data encryption, user authentication, access controls, and audit logs. Tools like Apache Hadoop and Apache Spark emphasize strong encryption protocols and role-based access controls, ensuring that sensitive data is protected. Additionally, platforms such as Google BigQuery and Amazon EMR provide comprehensive logging and monitoring capabilities to track data access and modifications, enhancing overall security. User reviews highlight the importance of these features in maintaining data integrity and compliance with regulations.

To evaluate the performance of Big Data Processing solutions, consider key metrics such as processing speed, scalability, and ease of integration. User reviews highlight that Apache Spark excels in processing speed with a rating of 4.5, while Hadoop is noted for its scalability, receiving a 4.3 rating. Additionally, solutions like Google BigQuery are praised for ease of use, achieving a 4.6 rating. Analyzing these aspects alongside user feedback on reliability and support can provide a comprehensive view of each solution's performance.

Customer support in the Big Data Processing and Distribution category typically includes options such as 24/7 support, live chat, and extensive documentation. For instance, products like Apache Kafka and Snowflake are noted for their strong community support and comprehensive online resources, while Cloudera offers dedicated account management and personalized support. Additionally, many vendors provide training sessions and user forums to enhance customer engagement and troubleshooting capabilities.

User experiences among top Big Data Processing tools vary significantly. Apache Spark leads with high satisfaction ratings, particularly for its speed and scalability, receiving an average rating of 4.5/5. Hadoop follows closely, praised for its robust ecosystem but noted for a steeper learning curve, averaging 4.2/5. Databricks is favored for its collaborative features and ease of use, achieving a 4.6/5 rating. In contrast, AWS Glue, while effective for ETL processes, has mixed reviews regarding its complexity, averaging 4.0/5. Overall, users prioritize speed, ease of use, and support when evaluating these tools.

Common use cases for Big Data Processing and Distribution include real-time data analytics, where businesses analyze streaming data for immediate insights, and data warehousing, which involves storing large volumes of structured and unstructured data for reporting and analysis. Additionally, organizations utilize big data for predictive analytics to forecast trends and customer behavior, as well as for machine learning applications that require processing vast datasets to train algorithms. These use cases are supported by user feedback highlighting the importance of scalability and performance in handling large data sets.

The leading Big Data Processing platforms demonstrate strong scalability features. Apache Spark is highly rated for its ability to handle large-scale data processing with a user satisfaction score of 88%, emphasizing its performance in distributed computing. Amazon EMR also scores well, with users appreciating its seamless scaling capabilities, particularly in cloud environments. Google BigQuery is noted for its serverless architecture, allowing users to scale without managing infrastructure, achieving a satisfaction score of 90%. Overall, these platforms are recognized for their robust scalability, catering to varying data processing needs.

For Big Data Processing needs, consider integrations with Apache Hadoop, Apache Spark, and Amazon EMR. Users frequently highlight Apache Hadoop for its robust ecosystem and scalability, while Apache Spark is praised for its speed and ease of use. Amazon EMR is noted for its seamless integration with AWS services, enhancing data processing capabilities. Additionally, look into integrations with data visualization tools like Tableau and Power BI, which are commonly mentioned for their ability to provide insights from processed data.

Pricing models for Big Data Processing solutions vary significantly. For instance, Apache Spark offers a free open-source model, while Databricks employs a subscription-based model with tiered pricing based on usage. Cloudera provides a flexible pricing structure that includes both subscription and usage-based options. AWS Glue operates on a pay-as-you-go model, charging based on the resources consumed. In contrast, Google BigQuery uses a per-query pricing model, which can lead to variable costs depending on usage patterns. These diverse models cater to different organizational needs and budgets.

Key features to look for in Big Data Processing tools include scalability, which allows handling increasing data volumes; real-time processing capabilities for immediate insights; robust data integration options to connect various data sources; user-friendly interfaces for ease of use; and strong security measures to protect sensitive information. Additionally, support for machine learning and advanced analytics is crucial for deriving actionable insights from large datasets. Tools like Apache Spark, Apache Hadoop, and Google BigQuery are noted for excelling in these areas.